The Government Shutdown & Healthcare's Increasing Costs

With the government shut down over the Democrats' push to extend the COVID-era Affordable Care Act (ACA) health insurance subsidies, a lot of people have been questioning the health insurance system in this country. For me, this debate has raised a couple of important points that don't get talked about much.

For context, we're not talking about Medicaid patients, Medicare patients, or patients who get coverage through their employer. These are the roughly 24M people who don't have access to those programs and instead buy insurance on the public ACA exchanges. Once a year, they go to the exchanges and choose a plan that works for them, and the government subsidizes part of the premium based on their income. During COVID, Democrats expanded the size of those subsidies through the American Rescue Plan Act of 2021 (later extended by the Inflation Reduction Act), and that expansion expires at the end of this year. The challenge is that the cost of these plans has gone up significantly since COVID, making them even harder to afford. Democrats are refusing to fund the government unless Republicans agree to extend the enhanced subsidies.

How insurance works

To better understand what's happening, let's level-set on what health insurance is. Health insurance, like any other insurance, is pretty simple. Insurers pool money from a group of people to cover the costs incurred by whoever in that group receives healthcare services. Everyone pays into the pool, and when someone has a healthcare need, the insurer pays all or part of the cost. The key figure in any insurance pool is the actuary, who analyzes the risk of the people in the pool and sets the annual premium each person must pay. Setting this premium is tricky because you're balancing three things: 1/ the premium must be high enough for the insurer to cover the pool's healthcare expenses, 2/ it must be affordable enough that lots of healthy people participate, and 3/ there must be enough left over to cover the cost of running the health plan (under the ACA's medical loss ratio rules, this last amount is capped at 15%-20% of premiums). While it's not easy, an actuary can fairly reliably set premiums while managing those tradeoffs.
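To make the actuary's basic arithmetic concrete, here's a minimal Python sketch. It assumes the premium is simply the pool's average expected claims grossed up for a fixed overhead share (20% here, the top of the ACA cap). Real actuarial pricing is far more involved, and `break_even_premium` is a hypothetical helper for illustration, not an industry formula.

```python
def break_even_premium(expected_claims: list[float], admin_share: float = 0.20) -> float:
    """Per-member annual premium that covers the pool's average expected claims plus overhead.

    Hypothetical simplification: admin_share is the fraction of each premium dollar kept
    for running the plan (the ACA caps this at roughly 15%-20%); the rest pays claims.
    """
    if not expected_claims:
        raise ValueError("the pool needs at least one member")
    if not 0 <= admin_share < 1:
        raise ValueError("admin_share must be in [0, 1)")
    average_claims = sum(expected_claims) / len(expected_claims)
    # Claims have to be paid out of the (1 - admin_share) portion of every premium dollar.
    return average_claims / (1 - admin_share)


# Hypothetical four-person pool with very different expected annual claims.
print(break_even_premium([1_000, 2_000, 3_000, 10_000]))
```

In this example the pool averages $4,000 of expected claims per person, so the premium works out to $4,000 / 0.8 = $5,000. The healthiest member pays $5,000 for $1,000 of expected care, which is exactly why the second tradeoff (keeping healthy people in the pool) is so hard.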

Preexisting conditions without a mandate

But there's a big wrinkle with ACA plans: they must cover preexisting conditions*, and anyone can sign up during the roughly 3-month open enrollment period each year, no matter how sick they are. This radically changes the incentives for the typical patient. Many young people don't use healthcare regularly, so they really only need insurance in case of a catastrophic illness or accident. So, rationally, many healthy people skip the pool (why pay a premium now when you can wait until you get sick and sign up then?). Meanwhile, patients who need lots of healthcare are happy to sign up and pay the premium so they don't have to pay for services out of pocket. The result, of course, is a pool made up disproportionately of sicker patients, so the actuary has to set the premium higher to cover their costs. That makes the plans even less attractive to healthy people, and the cycle feeds on itself. This is what insurers call adverse selection.

The original spirit of the ACA was that insurers would cover preexisting conditions only if there was a mandate requiring everyone without other coverage to buy insurance (this would have pushed healthier people into the pool, lowering the cost for everyone). That was the deal. The mandate held until 2017, when the Republican Congress reduced its penalty to $0 in that year's tax bill (effective 2019), which drove up the cost of the plans (though some states still enforce their own mandates).
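The spiral described above can be sketched as a toy simulation. Everything in it is a hypothetical assumption for illustration: the six-person population, the rule that someone buys coverage only if the premium is at most 1.5 times their own expected claims, and an insurer that simply reprices each year to break even on whoever enrolled.

```python
def break_even_premium(expected_claims: list[float], admin_share: float = 0.20) -> float:
    # Average expected claims, grossed up so claims use (1 - admin_share) of each premium dollar.
    return sum(expected_claims) / len(expected_claims) / (1 - admin_share)


def simulate_pool(
    expected_claims: list[float],
    years: int = 4,
    admin_share: float = 0.20,
    risk_loading: float = 0.5,
    mandate: bool = False,
) -> list[tuple[float, int]]:
    """Return (premium offered, number enrolled) for each simulated year.

    Hypothetical behavioral rule: a person buys coverage only if the premium is at most
    (1 + risk_loading) times their own expected claims, unless a mandate requires everyone to enroll.
    """
    # Year 1 is priced as if the whole population will join.
    premium = break_even_premium(expected_claims, admin_share)
    history: list[tuple[float, int]] = []
    for _ in range(years):
        if mandate:
            pool = list(expected_claims)
        else:
            # People whose expected claims are low relative to the premium opt out.
            pool = [c for c in expected_claims if c * (1 + risk_loading) >= premium]
        history.append((premium, len(pool)))
        if not pool:
            break  # nobody left to insure
        # Next year's premium is repriced to cover whoever actually enrolled.
        premium = break_even_premium(pool, admin_share)
    return history


population = [500, 1_000, 2_000, 4_000, 8_000, 16_000]  # hypothetical expected annual claims
print(simulate_pool(population))                # no mandate
print(simulate_pool(population, mandate=True))  # everyone must enroll
```

Without the mandate, the first-year premium of $6,562.50 drives out everyone but the two sickest people, repricing then drives out one more, and the premium settles at $20,000, roughly three times where it started. With `mandate=True`, all six people stay in and the premium holds at its original level.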

Requiring people to have health insurance is obviously a thorny topic. Critics will say it infringes on personal freedoms, and I suppose it does. The problem with this argument is that we're already infringing on personal freedoms. The Emergency Medical Treatment and Active Labor Act (EMTALA) requires US hospitals to provide stabilizing emergency treatment to any patient regardless of ability to pay. That cost gets passed on to every other patient in the form of higher hospital bills, and then passed on to the insurance pool when that patient, who now has a preexisting condition, finally decides it's worth signing up for insurance. That's an infringement of its own.

So the result of all of this is that the ACA plans are fundamentally broken: sick patients are incentivized to join the pool while healthy patients are not. Congress has put a Band-Aid on this by subsidizing premiums with tax dollars, and those subsidies only get more controversial as the cost of healthcare keeps rising. Neither side seems particularly interested in fixing the underlying problem.

The changing definition of "healthcare"

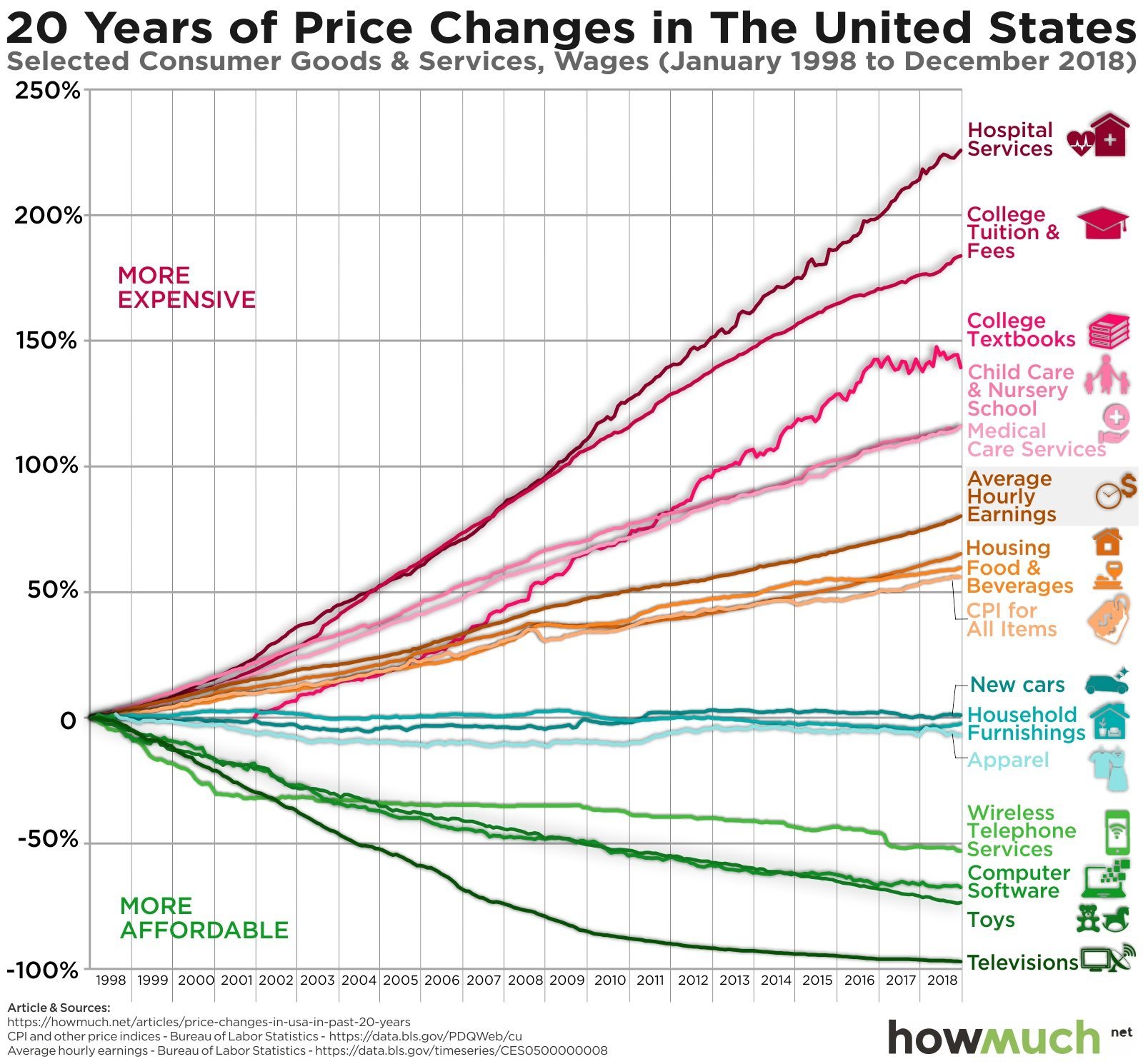

Beyond the broken structure of the plans, the cost of insuring patients in general keeps rising. It's worth thinking about this from first principles for a moment.

It's often said that "healthcare is a human right." Whenever I hear this, I think to myself, "What do you mean by healthcare?"

If I asked you this in 1925, you would've meant basic exams from a physician, help with childbirth, and a handful of medicines (antibiotics didn't exist yet). If I asked you in 1975, you would've added specialty care, inpatient surgeries, ER treatment, lab tests, and generic drugs. If I asked you in 2025, you'd add advanced diagnostics, integrated care teams, robotic-assisted procedures, MRIs, CT scans, genetic & molecular testing, wide-ranging specialty drugs, substance abuse programs, and wellness services.

The point is that healthcare changes significantly over time, both in the services available and in our expectations of access to them. Said differently, healthcare gets a lot more expensive over time. Of all the complex factors behind the cost of healthcare, this one is perhaps the easiest to understand. I read the other day that premiums are up 27% this year on average, largely due to massive demand for Ozempic and other GLP-1 drugs that many plans have decided to cover. How we define "healthcare" changes over time, and it only moves in one direction.

Back to the actuary at the insurance company. This person watches all of this happen as more expensive services become available and patients' expectations rise, but they're still bound by the same constraints: a premium low enough to pull in healthy people but high enough to cover all of these great services. This is where the actuary's job gets difficult, and you start to realize that, in a way, the insurance company really gets paid to be the bad guy. Insurers manage this tradeoff by deciding what they will and won't cover (which is obviously enormously controversial) while trying to keep the premium reasonable enough to pull people into the pool.

And then, on top of that, the government subsidizes these premiums, which makes the problem worse. Because the subsidy absorbs part of the premium, the insurer can charge more while still drawing the same number of people into the pool, which raises the overall cost of the plan.
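Here's a simplified sketch of why that happens, based on how the ACA's premium tax credit is structured: the credit equals the benchmark (second-lowest-cost silver) plan's premium minus an expected contribution set as a percentage of household income. The household income and the 8.5% contribution rate below are hypothetical, and the sketch ignores age rating, cost-sharing reductions, and plan choice.

```python
def premium_tax_credit(benchmark_premium: float, household_income: float, applicable_pct: float) -> float:
    """Simplified ACA-style premium tax credit (never below zero).

    The credit covers whatever the benchmark premium costs beyond the enrollee's expected
    contribution. applicable_pct is an illustrative input; the real percentage depends on
    income and the year's rules.
    """
    expected_contribution = applicable_pct * household_income
    return max(0.0, benchmark_premium - expected_contribution)


def cost_split(benchmark_premium: float, household_income: float, applicable_pct: float) -> tuple[float, float]:
    """Return (enrollee pays, government pays) for the benchmark plan."""
    credit = premium_tax_credit(benchmark_premium, household_income, applicable_pct)
    return benchmark_premium - credit, credit


# Hypothetical household: $50,000 income, 8.5% expected contribution.
for premium in (10_000, 12_700):  # a 27% premium increase
    enrollee, government = cost_split(premium, 50_000, 0.085)
    print(f"premium ${premium:,}: enrollee ${enrollee:,.0f}, government ${government:,.0f}")
```

In both cases the household pays $4,250 (8.5% of $50,000), while the government's share rises from $5,750 to $8,450. The entire premium increase lands on taxpayers rather than on the enrollee, so subsidized enrollees have little reason to push back on it.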

Avoiding the tradeoffs

There's always lots of talk about the rising cost of insurance, but we rarely pair it with an acknowledgement of how much more we're getting access to, or the tradeoff that comes with it. We seem to be stuck in a rut of assuming we should get instant access to any new service that emerges. We then get access to those services. We then see our premiums go up. And then we complain about it. We ignore the obvious tradeoff being made and blame the insurance companies when they, as a last resort, have to manage it.

This brings me back to what we mean when we say healthcare is a human right. Are we talking about healthcare in 1925, 1975, or 2025? Because those are very, very different things at very different price points. Obviously, no politician is going to advocate covering only the services offered in 1925, so the tradeoff between instant access to amazing healthcare and a reasonable cost gets completely ignored.

The cost of healthcare debate is a lot more complicated than simple greed or inefficiency. It's about very basic and fundamental structural tradeoffs associated with ever-expanding services and patient expectations, and the avoidance of hard decisions at the policy level. Hopefully, we'll look back at the shutdown as the start of an enormously important and long-overdue set of conversations.

*Note: Employer-sponsored plans must also cover preexisting conditions, but they don't face the same adverse selection that ACA plans do: their members are employed, are likely under less financial pressure, and usually enroll when they join the company rather than when they get sick.